Assistive technologies such as Amazon Alexa, Apple Siri and Google Lens have revolutionized the way we interact with computers and genuinely assist us in our daily lives. However, they are still not perfect, because users' data has to travel from their smartphones to the providers' cloud. This round trip can delay responses for several reasons, requires a high-speed internet connection and, last but not least, increases the risk of the data being hacked, exposed in a privacy scandal or abused. These advanced artificial intelligence systems are based on deep learning, which is computationally very heavy and normally requires Graphics Processing Unit (GPU) servers.

But is there a way to run deep learning locally on smartphones, without sending the data to the cloud?

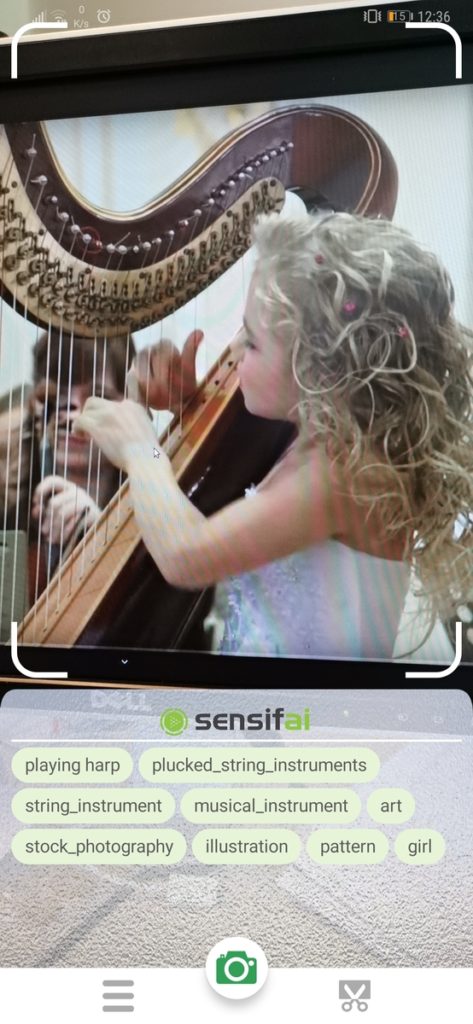

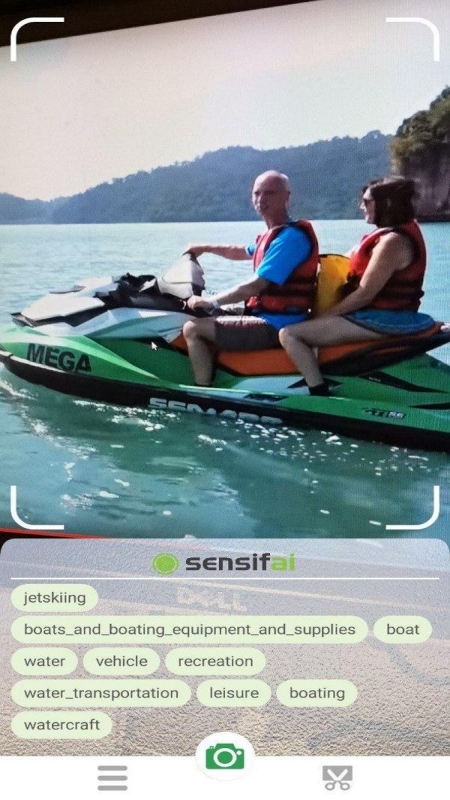

Recently, Sensifai, a Belgium-based startup, launched a smartphone app that runs deep learning for real-time video recognition. The app recognizes more than 3,000 different concepts, including objects, scenes, attributes and even human actions, at over 30 frames per second on the live video feed from the smartphone camera. The app draws less than 150 mA of current, only about 10% of what Google Maps uses, so users can run it for hours without worrying about the battery. Some example results from the app are shown in this screen recording video.
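To put the current-draw figure in perspective, the sketch below does the back-of-the-envelope arithmetic. It assumes a hypothetical 3000 mAh battery and the ideal relation runtime = capacity / average current, ignoring the display, camera and battery-discharge effects, so it shows relative scale only, not a measured battery life. The Google Maps figure is simply what the quoted "10%" implies.

```python
# Back-of-the-envelope battery arithmetic for the figures quoted above.
# ASSUMPTION: a hypothetical 3000 mAh battery; real capacities vary by phone.
# The ideal formula ignores screen, camera and discharge losses.

APP_CURRENT_MA = 150.0    # upper bound quoted for the app
APP_SHARE_PERCENT = 10.0  # "only 10% of what Google Maps uses"


def hours_of_runtime(battery_mah: float, current_ma: float) -> float:
    """Ideal runtime in hours = capacity (mAh) / average current draw (mA)."""
    if current_ma <= 0:
        raise ValueError("current draw must be positive")
    return battery_mah / current_ma


# Current draw for Google Maps implied by the 10% comparison.
implied_maps_ma = APP_CURRENT_MA * 100.0 / APP_SHARE_PERCENT

battery_mah = 3000.0  # hypothetical capacity, for illustration only

print(f"Implied Google Maps draw: {implied_maps_ma:.0f} mA")
print(f"App alone:   {hours_of_runtime(battery_mah, APP_CURRENT_MA):.1f} h")
print(f"Google Maps: {hours_of_runtime(battery_mah, implied_maps_ma):.1f} h")
```

Under these assumptions the app alone would take about 20 hours to drain the battery versus about 2 hours for the implied Google Maps draw; the ratio, not the absolute hours, is the meaningful part.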

Sensifai says that the next generation of the app will run on all smartphones with recent chipsets from Huawei (Kirin 980 and 990), Qualcomm (Snapdragon 845 and 855) and MediaTek (Helio P90), and will recognize up to 10,000 different real-world concepts at over 50 frames per second.
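The frame rates translate directly into a time budget per frame. The sketch below assumes every frame is recognized and frames are processed one after another (no pipelining across frames); under that assumption, capture, preprocessing and network inference must all fit within 1000 / fps milliseconds.

```python
# Per-frame time budget implied by the quoted frame rates.
# ASSUMPTION: every frame is processed, sequentially, with no pipelining.


def frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


for label, fps in [("current app", 30), ("next generation", 50)]:
    print(f"{label}: {fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
```

Moving from 30 to 50 frames per second shrinks the budget from about 33.3 ms to 20 ms per frame, while the number of recognizable concepts is meant to grow from 3,000 to 10,000.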

Compared to conventional cloud-based video and image recognition apps, this new technology is truly real-time because it removes (1) the delays caused by a poor internet connection when uploading data to, and downloading results from, the provider's cloud and (2) the delays caused by queueing simultaneous queries from millions of users on a central cloud-based processor. The new app processes data locally, without needing an internet connection: each query is handled right on the user's smartphone, so the computation is fully distributed across users' devices rather than centralized in the cloud.
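The two sources of delay described above can be written as a simple additive latency model. This is a minimal sketch: the component names and the example millisecond values are hypothetical and chosen only to illustrate the structure, not measurements of any real service or of the Sensifai app.

```python
from dataclasses import dataclass


@dataclass
class CloudRoundTrip:
    """Hypothetical latency components (ms) for one cloud recognition query."""
    upload_ms: float     # (1) sending the frame over the network
    queue_ms: float      # (2) waiting behind other users' queries
    inference_ms: float  # running the model on the server
    download_ms: float   # (1) returning the result to the phone

    def total_ms(self) -> float:
        """End-to-end latency is the sum of all sequential stages."""
        return self.upload_ms + self.queue_ms + self.inference_ms + self.download_ms


def on_device_total_ms(inference_ms: float) -> float:
    """On-device path: no network transfer and no shared queue, only local inference."""
    return inference_ms


# Illustrative, made-up values: even with faster server inference,
# the network and queueing terms dominate the cloud path.
cloud = CloudRoundTrip(upload_ms=80, queue_ms=40, inference_ms=10, download_ms=20)
print(f"cloud:     {cloud.total_ms():.0f} ms")
print(f"on-device: {on_device_total_ms(25):.0f} ms")
```

The design point is that the on-device path drops the upload, queue and download terms entirely, so its latency no longer depends on connection quality or on how many other users are active.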

The app also shows that assistants such as Alexa or Siri could run offline on users' devices, not only delivering better performance in terms of speed and accuracy but also attracting users who are reluctant to share their personal data with service providers and be exposed to modern cyber threats.

Because the new technology is highly energy-efficient, small and light compared to its GPU-based counterparts, it could be a breakthrough that enables many industries to run real-time image, audio and video processing on the device itself. For example, the automotive industry could use it in driver-assistance systems or self-driving cars, robots could interact with their surroundings and with humans in a natural way, drones could perform visual pattern recognition, and assistants such as Alexa or Siri could perform speech recognition directly on the device instead of transmitting user data to the cloud.